V Sanjay's Domain Report (Level 2)

14 / 7 / 2024

Task 1 - Naive Bayesian Classifier

Understanding the Naive Bayesian Classifier

The Naive Bayesian Classifier is a probabilistic machine learning model based on Bayes' Theorem, with the "naive" assumption that features are independent given the class label. Despite its simplicity and the often unrealistic assumption of feature independence, the Naive Bayesian Classifier works surprisingly well for many real-world tasks, especially in text classification.
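The idea can be sketched with a small multinomial Naive Bayes text classifier written from scratch. This is a minimal illustration, not the linked implementation: the toy documents, the labels, and the function names `train_naive_bayes` and `predict_naive_bayes` are all made up for the example, and add-one (Laplace) smoothing is an assumed choice.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(docs, labels):
    """Count documents per class and words per class from tokenized documents."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    vocab = set()
    for tokens, label in zip(docs, labels):
        word_counts[label].update(tokens)
        vocab.update(tokens)
    return class_counts, word_counts, vocab

def predict_naive_bayes(model, tokens):
    """Return the class with the highest log posterior, treating words as independent."""
    class_counts, word_counts, vocab = model
    total_docs = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, count in class_counts.items():
        score = math.log(count / total_docs)  # log prior
        total_words = sum(word_counts[label].values())
        for tok in tokens:
            if tok not in vocab:
                continue  # skip words never seen during training
            # Laplace (add-one) smoothing avoids zero probabilities
            score += math.log((word_counts[label][tok] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Toy training data (hypothetical)
docs = [
    ["free", "prize", "win"],
    ["win", "cash", "now"],
    ["meeting", "at", "noon"],
    ["project", "meeting", "notes"],
]
labels = ["spam", "spam", "ham", "ham"]
model = train_naive_bayes(docs, labels)
print(predict_naive_bayes(model, ["win", "prize"]))
print(predict_naive_bayes(model, ["project", "meeting"]))
```

Working in log space keeps the product of many small probabilities from underflowing, which matters once documents contain more than a handful of words.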

Text Classification with Naive Bayes & Golf Dataset Classification with Naive Bayes

Implementation (GitHub)

Task 2 - Decision Tree based ID3 Algorithm

Introduction

The ID3 (Iterative Dichotomiser 3) algorithm is a fundamental algorithm used in machine learning to create decision trees. Developed by Ross Quinlan in 1986, ID3 is based on the concept of information theory and entropy. This algorithm is primarily used for classification tasks, where the goal is to predict the class label of instances based on various features.

ID3 Algorithm Overview

- Entropy: Measures the uncertainty or impurity in a dataset. It is used to quantify the amount of randomness in the data.

- Information Gain: The reduction in entropy after splitting a dataset on a feature. The feature that provides the highest information gain is selected for splitting.

- Decision Tree Construction: The ID3 algorithm recursively selects the feature with the highest information gain to build the decision tree. Recursion stops at a node when all of its instances share the same class, or when no features remain, in which case the leaf is assigned the majority class.
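The three ideas above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the six-row weather table is invented for the example, features are categorical, and the function names (`entropy`, `information_gain`, `id3`) are not taken from the linked implementation.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((c / total) * math.log2(c / total) for c in Counter(labels).values())

def information_gain(rows, labels, feature):
    """Entropy of the labels minus the weighted entropy after splitting on `feature`."""
    total = len(labels)
    subsets = {}
    for row, label in zip(rows, labels):
        subsets.setdefault(row[feature], []).append(label)
    remainder = sum(len(s) / total * entropy(s) for s in subsets.values())
    return entropy(labels) - remainder

def id3(rows, labels, features):
    """Build a nested-dict decision tree; leaves are class labels."""
    if len(set(labels)) == 1:
        return labels[0]  # pure node
    if not features:
        return Counter(labels).most_common(1)[0][0]  # majority class
    best = max(features, key=lambda f: information_gain(rows, labels, f))
    remaining = [f for f in features if f != best]
    tree = {best: {}}
    for value in set(row[best] for row in rows):
        idx = [i for i, row in enumerate(rows) if row[best] == value]
        tree[best][value] = id3([rows[i] for i in idx], [labels[i] for i in idx], remaining)
    return tree

# Toy data (hypothetical)
rows = [
    {"outlook": "sunny", "windy": False},
    {"outlook": "sunny", "windy": True},
    {"outlook": "overcast", "windy": False},
    {"outlook": "rainy", "windy": False},
    {"outlook": "rainy", "windy": True},
    {"outlook": "overcast", "windy": True},
]
labels = ["no", "no", "yes", "yes", "no", "yes"]
tree = id3(rows, labels, ["outlook", "windy"])
print(tree)
```

On this table the labels are evenly split, so the starting entropy is 1 bit. Splitting on `outlook` leaves only the `rainy` branch impure, which is why it is chosen as the root.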

Task 3 - Ensemble techniques

Ensemble techniques in machine learning involve combining multiple models to improve the overall performance of a predictive task. Instead of relying on a single model, ensemble methods leverage the strengths of multiple models to reduce errors, increase accuracy, and enhance generalization to new data.

Types of Ensemble Techniques

Bagging (Bootstrap Aggregating):

- Bagging involves training multiple models independently on different bootstrap samples of the data (random samples drawn with replacement) and then averaging their predictions (for regression) or taking a majority vote (for classification).

Boosting:

- Boosting is a sequential technique where models are trained one after another, with each new model focusing on correcting the errors made by the previous ones.

Stacking:

- Stacking involves training multiple different types of models (e.g., decision trees, logistic regression, SVM) and then training a meta-model to combine their predictions.
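As a rough sketch of the bagging idea, the example below trains several one-feature threshold classifiers ("stumps") on bootstrap samples and combines them by majority vote. The base learner, the toy data, and the names `train_stump`, `bagging_fit`, and `bagging_predict` are assumptions made for illustration only.

```python
import random
from collections import Counter

def train_stump(xs, ys):
    """Fit a threshold classifier on one feature: (threshold, left_label, right_label)."""
    majority = Counter(ys).most_common(1)[0][0]
    best = (float("inf"), majority, majority)  # fallback: always predict the majority
    best_errors = sum(y != majority for y in ys)
    values = sorted(set(xs))
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        l_label = Counter(left).most_common(1)[0][0]
        r_label = Counter(right).most_common(1)[0][0]
        errors = sum(y != l_label for y in left) + sum(y != r_label for y in right)
        if errors < best_errors:
            best, best_errors = (t, l_label, r_label), errors
    return best

def stump_predict(stump, x):
    t, l_label, r_label = stump
    return l_label if x <= t else r_label

def bagging_fit(xs, ys, n_models=25, seed=0):
    """Train one stump per bootstrap sample (sampling with replacement)."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(train_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return models

def bagging_predict(models, x):
    """Majority vote over the individual model predictions."""
    votes = Counter(stump_predict(m, x) for m in models)
    return votes.most_common(1)[0][0]

# Toy one-dimensional data (hypothetical)
xs = [1, 2, 3, 4, 7, 8, 9, 10]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
models = bagging_fit(xs, ys)
print(bagging_predict(models, 2), bagging_predict(models, 9))
```

Boosting differs in that the models are trained in sequence rather than independently, and stacking replaces the vote with a trained meta-model.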

Task 4 - Anomaly Detection

Introduction

Objective: To detect anomalies in a heart attack dataset. Anomalies in this context could represent unusual patterns in patient data that may indicate rare or critical conditions. This can be useful for identifying outliers that may require further medical attention or investigation.

Dataset Used:

- Name: Heart Attack Dataset

- Description: The dataset contains various features related to patient health and medical history, including age, sex, chest pain type, resting blood pressure, cholesterol levels, fasting blood sugar, and electrocardiographic results.
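The report does not state which detection method was applied. As one simple possibility, the sketch below flags values outside the interquartile-range (IQR) fences on a single numeric feature. The cholesterol readings are invented for illustration and are not taken from the dataset, and `iqr_outliers` is a hypothetical helper name.

```python
import statistics

def iqr_outliers(values, k=1.5):
    """Return values lying more than k * IQR below Q1 or above Q3."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

# Hypothetical cholesterol readings (mg/dl), not real patient data
cholesterol = [210, 225, 198, 240, 230, 215, 205, 560, 220, 235]
print(iqr_outliers(cholesterol))
```

A rule like this looks at one feature at a time. Multivariate detectors are a common alternative when an anomaly only shows up as an unusual combination of features.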

Task 5 - Random Forest, GBM and XGBoost

Random Forest is an ensemble learning algorithm used for both classification and regression tasks. It works by creating multiple decision trees during training and aggregating their results for prediction.

- Understanding: Random Forest leverages bagging (Bootstrap Aggregation) to reduce overfitting and improve accuracy. Each tree is trained on a bootstrap sample of the data and considers only a random subset of features at each split, which decorrelates the trees. Their predictions are then combined (usually through voting for classification or averaging for regression).

- Implementation: GitHub Link

Gradient Boosting Machine (GBM) is an ensemble technique that creates sequential trees, where each tree aims to correct the errors of the previous ones. GBM uses boosting, which combines weak learners to form a strong learner by minimizing the loss function.

- Understanding: In GBM, the model is trained in a stage-wise fashion, where each new tree is fitted to the residuals (errors) of the previous trees to reduce overall error.

- Implementation: GitHub Link
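The stage-wise residual fitting described above can be sketched for squared-error regression with depth-one trees as the weak learners. This is a simplified illustration, not the linked implementation: the single input feature, the toy data, the default `n_trees` and `learning_rate`, and the function names are all assumptions.

```python
def fit_stump(xs, residuals):
    """Regression stump on one feature: pick the threshold with the lowest squared error."""
    best = None
    values = sorted(set(xs))
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = sum((r - left_mean) ** 2 for r in left) + sum((r - right_mean) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, t, left_mean, right_mean)
    return best[1:]

def gbm_fit(xs, ys, n_trees=50, learning_rate=0.1):
    """Start from the mean, then repeatedly fit a stump to the current residuals."""
    base = sum(ys) / len(ys)
    preds = [base] * len(ys)
    stumps = []
    for _ in range(n_trees):
        # For squared error, the residuals are the negative gradient of the loss
        residuals = [y - p for y, p in zip(ys, preds)]
        t, left_mean, right_mean = fit_stump(xs, residuals)
        stumps.append((t, left_mean, right_mean))
        preds = [p + learning_rate * (left_mean if x <= t else right_mean)
                 for x, p in zip(xs, preds)]
    return base, stumps, learning_rate

def gbm_predict(model, x):
    base, stumps, lr = model
    return base + lr * sum(lm if x <= t else rm for t, lm, rm in stumps)

def mse(ys, preds):
    return sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)

# Toy regression data (hypothetical)
xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.2, 0.9, 3.1, 3.0, 2.9]
model = gbm_fit(xs, ys)
baseline_mse = mse(ys, [sum(ys) / len(ys)] * len(ys))
boosted_mse = mse(ys, [gbm_predict(model, x) for x in xs])
print(baseline_mse, boosted_mse)
```

The learning rate shrinks each tree's contribution, so many small corrections accumulate instead of one tree fitting the residuals outright.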

XGBoost (Extreme Gradient Boosting) is an optimized version of GBM designed for speed and performance. It includes regularization to prevent overfitting, and parallelization for faster training.

- Understanding: XGBoost is built on the principles of GBM but with significant performance improvements. It introduces additional regularization terms to penalize complex models and reduce overfitting.

- Implementation: GitHub Link

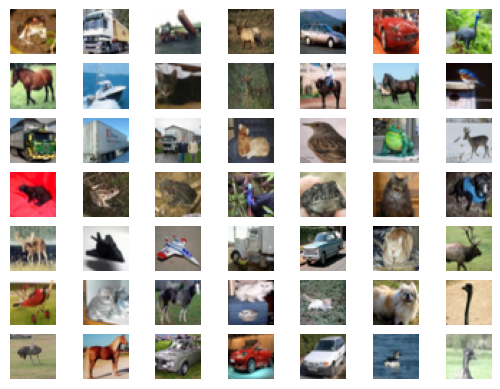

Task 6 - Image Classification using KMeans Clustering

KMeans Clustering works by:

- Initialization: Selecting k initial centroids randomly from the dataset.

- Assignment Step: Assigning each data point to the nearest centroid based on a distance metric (typically Euclidean distance).

- Update Step: Recalculating centroids by averaging the positions of the assigned data points.

- Iteration: Repeating the assignment and update steps until convergence, where the centroids no longer change significantly.
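The four steps above can be sketched directly in Python. This is a minimal illustration on made-up 2-D points rather than image data. The function name `kmeans`, the fixed `seed`, and the choice to leave an empty cluster's centroid unchanged are assumptions for the example.

```python
import math
import random

def kmeans(points, k, max_iters=100, seed=0):
    """Plain KMeans (Lloyd's algorithm) on a list of equal-length tuples."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialization: k random data points
    labels = []
    for _ in range(max_iters):
        # Assignment step: index of the nearest centroid (Euclidean distance)
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: each centroid moves to the mean of its assigned points
        new_centroids = []
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                new_centroids.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid
        if new_centroids == centroids:  # convergence: centroids stopped moving
            break
        centroids = new_centroids
    return centroids, labels

# Two well-separated toy groups (hypothetical data)
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centroids, labels = kmeans(points, k=2)
print(labels)
```

The cluster indices themselves are arbitrary. What matters is which points end up sharing an index.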

Key Features

- Unsupervised Learning: KMeans does not require labeled data for training.

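- Scalability: The cost of each iteration grows linearly with the number of data points, clusters, and dimensions, so it can handle large datasets efficiently.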

- Simplicity: The algorithm is easy to implement and understand.

Steps to Implement KMeans Clustering

- Data Preprocessing: Load and preprocess the MNIST dataset.

- Feature Extraction: Flatten images to convert them into a suitable format for clustering.

- KMeans Algorithm: Apply the KMeans algorithm to group the images into clusters.

- Visualization: Visualize the clustered results.

- Implementation: GitHub Link

Task 7 - Hyperparameter Tuning

Hyperparameters are configuration settings used to control the training process of machine learning models. Unlike model parameters learned during training, hyperparameters are set before the learning begins. Examples include:

- Learning Rate: The step size for updating model parameters.

- Number of Trees: In ensemble methods like Random Forest, the number of trees to build.

- Maximum Depth: Limits the depth of trees to prevent overfitting.

- Implementation: GitHub Link
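One common way to choose such settings is an exhaustive grid search. The sketch below is a generic illustration, not the linked implementation. `toy_validation_score` is a hypothetical stand-in for "train a model with these settings and return its validation score", and the grid values are made up.

```python
from itertools import product

def grid_search(param_grid, evaluate):
    """Evaluate every combination in param_grid and return the best (params, score)."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for combo in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, combo))
        score = evaluate(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

def toy_validation_score(params):
    """Hypothetical scoring function that peaks at learning_rate=0.1, max_depth=4."""
    return 1.0 - abs(params["learning_rate"] - 0.1) - 0.01 * abs(params["max_depth"] - 4)

param_grid = {
    "learning_rate": [0.01, 0.1, 0.5],
    "n_estimators": [50, 100],
    "max_depth": [2, 4, 8],
}
best_params, best_score = grid_search(param_grid, toy_validation_score)
print(best_params, best_score)
```

The number of combinations is the product of the list lengths (18 here), which is why random search is often preferred once the grid grows large.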

Task 8 - Table Analysis Using PaddleOCR

Optical Character Recognition (OCR) is a technology that converts different types of documents, such as scanned paper documents, PDFs, or images, into editable and searchable data. PaddleOCR is an efficient OCR toolkit that provides comprehensive solutions for extracting text and structured data from images, particularly tables. This report outlines the implementation of a pipeline to detect and analyze tabular data using PaddleOCR, including statistical computations and data visualization.

Once the data is extracted, we can analyze it using Pandas. This can include operations such as calculating averages, sums, and other statistical measures.

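```python
import pandas as pd

# table_data is assumed to be a list of rows (one list of cell values per row)
# produced by the OCR step; the column names below are placeholders.
df = pd.DataFrame(table_data, columns=['Column1', 'Column2', 'Column3'])

# OCR returns text, so convert cells to numbers; non-numeric cells become NaN
df = df.apply(pd.to_numeric, errors='coerce')

# Perform statistical computations
mean_values = df.mean(numeric_only=True)
print("Mean Values:\n", mean_values)

# Example of other statistical computations
summary = df.describe()
print("Statistical Summary:\n", summary)
```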

Task 9 - PDF Query Using LangChain

The architecture of the PDF Query System is composed of the following components:

- PDF Text Extraction: Uses PyPDF2 to extract textual content from PDF files.

- Vector Store: Implements FAISS (Facebook AI Similarity Search) for efficient retrieval of relevant excerpts.

- Question-Answering Model: Utilizes Hugging Face’s Transformers for interpreting user queries and generating answers.

- Retrieval Chain: Integrates the vector store with the question-answering model to form a cohesive system.

- Implementation: GitHub Link
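To show what the retrieval step does conceptually, the sketch below ranks text chunks by cosine similarity to a query. It deliberately swaps in a toy bag-of-words vector for the neural embeddings and FAISS index used in the actual system, so that it runs with the standard library only. The chunks and the names `embed`, `cosine`, and `retrieve` are hypothetical.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy 'embedding': lowercase word counts (a real system would use a neural encoder)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(chunks, query, top_k=2):
    """Return the top_k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(embed(c), q), reverse=True)
    return ranked[:top_k]

# Hypothetical chunks standing in for text extracted from a PDF
chunks = [
    "Random Forest trains many decision trees on bootstrap samples.",
    "KMeans assigns each point to the nearest centroid.",
    "A GAN pairs a generator with a discriminator.",
]
top = retrieve(chunks, "How does KMeans assign points?", top_k=1)
print(top)
```

In the full pipeline, the retrieved chunks would be passed to the question-answering model as context for generating the answer.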

Task 10 - Generative AI Task Using GAN

The GAN architecture comprises two main components:

- Generator: This network takes random noise as input and generates synthetic images. It uses transposed convolution layers to upscale the input into a higher-dimensional space.

- Discriminator: This network evaluates the authenticity of the images, distinguishing between real (from the dataset) and fake (generated) images. It consists of convolutional layers that reduce the dimensionality of the input images.

- Implementation: GitHub Link
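The report does not state which loss was used for training. For reference, the standard GAN formulation treats the two networks as players in a minimax game, where $G$ is the generator, $D$ the discriminator, $p_{\text{data}}$ the distribution of real images, and $p_z$ the noise distribution (symbols introduced here for illustration):

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]
$$

The discriminator tries to maximize $V$ by assigning high probability to real images and low probability to generated ones, while the generator tries to minimize it by producing images that the discriminator scores as real.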