LEVEL-3 REPORT

29 / 3 / 2025

Task 1: Decision Tree Based on the ID3 Algorithm

In this task, I implemented the ID3 decision tree algorithm from scratch in Python, using entropy, information gain, and recursive tree construction. The implementation consists of a Node class that represents tree nodes and a DecisionTree class that grows the tree. At each node, the model chooses the split with the highest information gain and recurses until a stopping condition is met. I trained the model on the Breast Cancer dataset from sklearn.datasets, using an 80-20 split between training and test sets. The model achieved an accuracy of 91.23% on the test set.
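To make the split criterion concrete, here is a minimal sketch of entropy and information gain for a binary split. It is not the code from the repository; the function names and the toy labels are assumptions chosen for illustration.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    counts = Counter(labels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

def information_gain(labels, left_labels, right_labels):
    """Entropy reduction obtained by splitting `labels` into two child groups."""
    total = len(labels)
    weighted_child_entropy = (
        len(left_labels) / total * entropy(left_labels)
        + len(right_labels) / total * entropy(right_labels)
    )
    return entropy(labels) - weighted_child_entropy

# Toy labels (made up): one split separates the classes, the other does not.
parent = [0, 0, 1, 1]
pure_gain = information_gain(parent, [0, 0], [1, 1])
useless_gain = information_gain(parent, [0, 1], [0, 1])
print(pure_gain, useless_gain)
```

A tree builder would evaluate this gain for every candidate split at a node and keep the split with the largest value.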

GitHub link: https://github.com/umeshsolanki2005/Decision-Tree-ID3.git

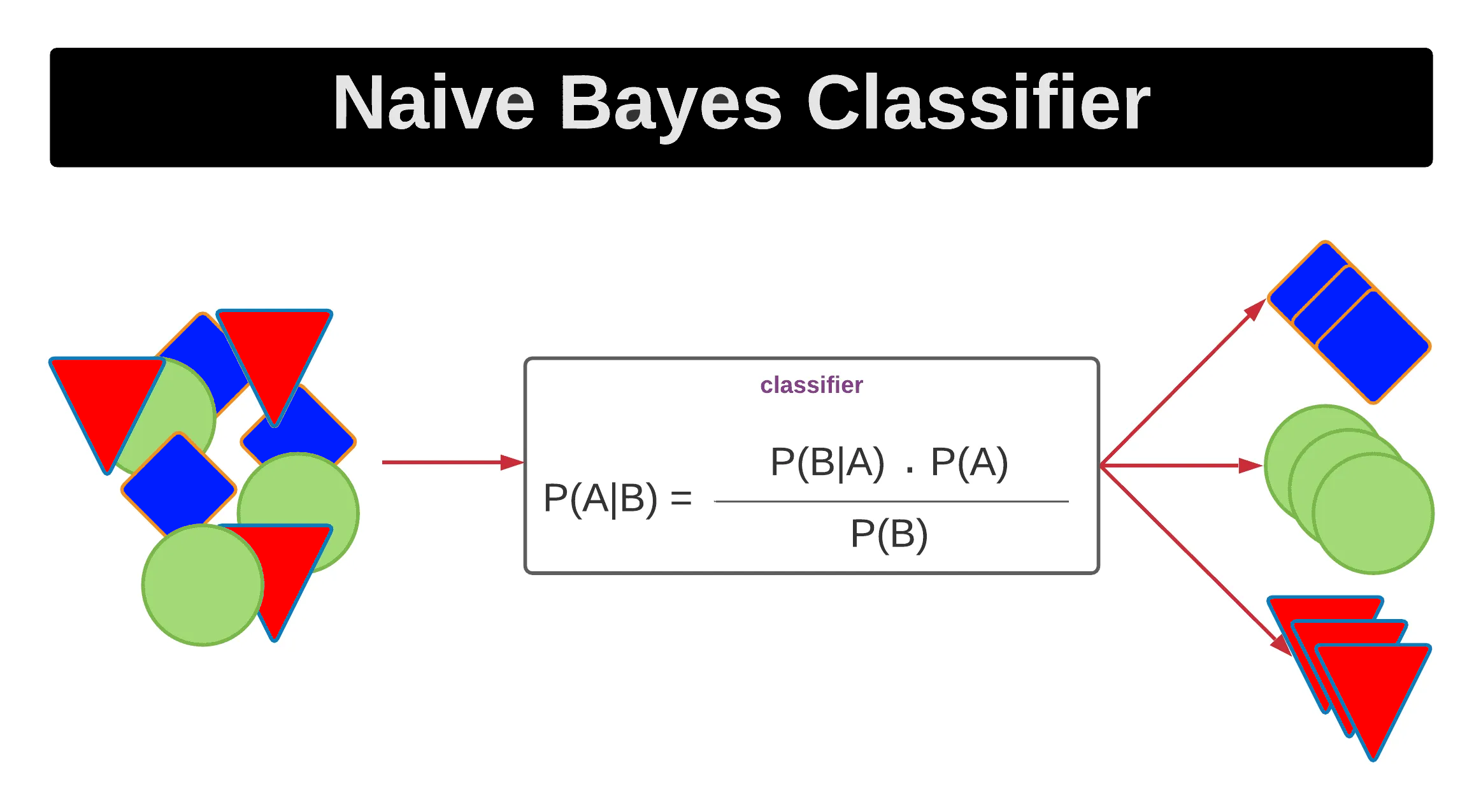

Task 2: Naive Bayes Classifier

I implemented a Naive Bayes classifier for spam detection using the Multinomial Naive Bayes model from sklearn. The dataset (spam.csv) contains text messages labeled as spam or ham; I encoded the labels as binary values (1 for spam, 0 for ham). After splitting the data into training and test sets, I used CountVectorizer to turn each message into a vector of word counts and trained the model on those features. The classifier achieved an accuracy of 98.06% on the test set, which shows how well Naive Bayes suits text classification.
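The task itself relies on sklearn's implementation. To show what a multinomial Naive Bayes model computes from word counts, the following is a simplified plain-Python sketch with Laplace smoothing. The corpus, the whitespace tokenizer, and the function names are assumptions for illustration only.

```python
import math
from collections import Counter, defaultdict

def train_multinomial_nb(messages, labels, alpha=1.0):
    """Collect per-class word counts; `alpha` is the Laplace smoothing constant."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)  # class -> word -> count
    vocabulary = set()
    for text, label in zip(messages, labels):
        words = text.lower().split()
        word_counts[label].update(words)
        vocabulary.update(words)
    return class_counts, word_counts, vocabulary, alpha

def predict(model, text):
    """Return the class with the highest log-posterior for `text`."""
    class_counts, word_counts, vocabulary, alpha = model
    total_messages = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, count in class_counts.items():
        score = math.log(count / total_messages)  # log prior
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            if word not in vocabulary:
                continue  # words never seen in training are skipped
            likelihood = (word_counts[label][word] + alpha) / (
                total_words + alpha * len(vocabulary)
            )
            score += math.log(likelihood)
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Made-up toy corpus; 1 = spam, 0 = ham, matching the encoding described above.
messages = ["win free prize now", "free cash win",
            "see you at lunch", "lunch at noon tomorrow"]
labels = [1, 1, 0, 0]
model = train_multinomial_nb(messages, labels)
print(predict(model, "free prize"), predict(model, "lunch tomorrow"))
```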

GitHub link: https://github.com/umeshsolanki2005/Naive-Based-Classifier.git

Task 3: Ensemble Techniques

Ensemble techniques in machine learning combine multiple models to improve predictive performance and reduce overfitting. Instead of relying on a single model, ensemble methods aggregate the predictions of several base models, which usually gives more robust and accurate results. Common ensemble techniques include Bagging (e.g., Random Forest), Boosting (e.g., AdaBoost, Gradient Boosting), and Stacking (training a meta-model on the predictions of the base models). For this task, I implemented an ensemble using the Voting Classifier on the Titanic dataset. The dataset was preprocessed by handling missing values and encoding categorical features. I selected Decision Tree, Logistic Regression, and K-Nearest Neighbors (KNN) as the base classifiers and combined them using hard voting, where the majority decision among the classifiers determines the final prediction. The trained ensemble achieved an accuracy of 90.8%.
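Hard voting itself is simple enough to sketch in a few lines. The example below is an illustration only, not the sklearn VotingClassifier used in the task; the prediction lists are hypothetical.

```python
from collections import Counter

def hard_vote(predictions_per_model):
    """Combine per-model label lists by majority vote, sample by sample."""
    combined = []
    for sample_votes in zip(*predictions_per_model):
        label, _ = Counter(sample_votes).most_common(1)[0]
        combined.append(label)
    return combined

# Hypothetical predictions from three base classifiers for four passengers.
tree_preds = [1, 0, 1, 0]
logreg_preds = [1, 1, 0, 0]
knn_preds = [0, 1, 1, 0]
final = hard_vote([tree_preds, logreg_preds, knn_preds])
print(final)
```

With three classifiers and two classes a tie cannot occur, which is one reason an odd number of base models is a convenient choice for hard voting.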

GitHub link: https://github.com/umeshsolanki2005/Ensemble-technique.git

Task 4: Random Forest, GBM and XGBoost

Random Forest, Gradient Boosting (GBM), and XGBoost are powerful ensemble learning techniques used for classification and regression tasks.

- Random Forest is an ensemble method that builds multiple decision trees and combines their predictions through majority voting (for classification) or averaging (for regression). It reduces overfitting by training each tree on a bootstrap sample of the data and considering only a random subset of features at each split.

- Gradient Boosting (GBM) is a sequential ensemble method that builds decision trees iteratively, with each new tree trained to correct the errors of the trees before it. Each step reduces the loss function a little further, which makes the method effective on complex datasets.

- XGBoost (Extreme Gradient Boosting) is an optimized implementation of gradient boosting that improves training speed and accuracy through regularization and parallel processing. It is widely used in machine learning competitions due to its efficiency and predictive power.
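The sequential error-correcting idea behind gradient boosting can be sketched in plain Python. This is a simplified illustration for squared loss on a one-dimensional feature, using depth-1 trees (stumps); the data, function names, and parameter values are assumptions and do not come from the repository.

```python
def fit_stump(xs, residuals):
    """Find the threshold split on a 1-D feature that minimizes squared error."""
    best = None
    for threshold in sorted(set(xs))[:-1]:  # skip the max so both sides are non-empty
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = sum((r - left_mean) ** 2 for r in left) + sum(
            (r - right_mean) ** 2 for r in right
        )
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean

def mse(ys, preds):
    """Mean squared error between targets and predictions."""
    return sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)

def gradient_boost(xs, ys, n_trees=20, learning_rate=0.5):
    """Boosting for squared loss: each stump is fitted to the current residuals."""
    base = sum(ys) / len(ys)
    preds = [base] * len(ys)
    history = [mse(ys, preds)]
    for _ in range(n_trees):
        residuals = [y - p for y, p in zip(ys, preds)]  # negative gradient of the loss
        stump = fit_stump(xs, residuals)
        preds = [p + learning_rate * stump(x) for p, x in zip(preds, xs)]
        history.append(mse(ys, preds))
    return preds, history

# Made-up regression data with three rough plateaus.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.2, 0.9, 3.1, 3.0, 2.9, 5.2, 5.0]
preds, history = gradient_boost(xs, ys)
print(round(history[0], 4), round(history[-1], 4))
```

The training error recorded in `history` shrinks as stumps are added. A Random Forest would instead train its trees independently on bootstrap samples and average them.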

GitHub link: https://github.com/umeshsolanki2005/RandomForest-GBM-Xboost.git

Task 5: Hyperparameter Tuning

Hyperparameter tuning is the process of optimizing the settings that control the learning process of a machine learning model. Unlike model parameters (such as the weights in linear regression), hyperparameters are set before training and influence how the model learns from the data. Examples include the number of trees in a Random Forest, the learning rate in gradient boosting, and the number of layers in a neural network. In this task, a Random Forest classifier was applied to the Iris dataset, and Grid Search Cross-Validation (GridSearchCV) was used for hyperparameter tuning. A hyperparameter grid was defined with several options for n_estimators, max_depth, min_samples_split, min_samples_leaf, and max_features. The best combination was selected by accuracy under 5-fold cross-validation.
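To give a sense of what a grid search has to evaluate, the sketch below enumerates a grid and builds 5 folds in plain Python. The parameter names are the ones listed above, but the candidate values are assumed, and the contiguous folds are a simplification (for classifiers, GridSearchCV uses stratified folds by default).

```python
from itertools import product

# Hypothetical grid: names come from the task, values are assumptions.
param_grid = {
    "n_estimators": [50, 100, 200],
    "max_depth": [None, 5, 10],
    "min_samples_split": [2, 5],
    "min_samples_leaf": [1, 2],
    "max_features": ["sqrt", "log2"],
}

def grid_candidates(grid):
    """Yield one dict per combination of hyperparameter values."""
    names = list(grid)
    for values in product(*(grid[name] for name in names)):
        yield dict(zip(names, values))

def k_fold_indices(n_samples, k):
    """Split range(n_samples) into k contiguous (train, validation) index pairs."""
    fold_size = n_samples // k
    folds = []
    for i in range(k):
        start = i * fold_size
        stop = n_samples if i == k - 1 else start + fold_size
        validation = list(range(start, stop))
        train = [j for j in range(n_samples) if j < start or j >= stop]
        folds.append((train, validation))
    return folds

candidates = list(grid_candidates(param_grid))
folds = k_fold_indices(150, 5)  # the Iris dataset has 150 samples
total_fits = len(candidates) * len(folds)
print(len(candidates), total_fits)
```

Every candidate is trained and scored once per fold, so the cost of an exhaustive grid grows multiplicatively with each added option.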

GitHub link: https://github.com/umeshsolanki2005/Hyperparameter-Tuning.git

Task 6: Image Classification Using K-Means Clustering

This task focuses on image classification using K-Means clustering on the MNIST dataset, which contains handwritten digits from 0 to 9. The dataset is first loaded using PyTorch and converted into NumPy arrays for easier processing. A visualization step is performed to display sample images, helping to understand their structure. K-Means clustering, an unsupervised learning algorithm, is then applied to group similar images based on pixel intensity patterns. Since the algorithm works without labeled data, it finds inherent patterns within the images and categorizes them into clusters. Finally, the results are analyzed by comparing the predicted clusters with the actual digit labels, demonstrating how unsupervised learning can be used for image classification and pattern recognition.
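Because K-Means returns arbitrary cluster ids rather than digits, comparing clusters with the true labels needs a mapping step. One common choice, assumed here and not necessarily what the repository does, is to give each cluster the most frequent true label among its members. The cluster ids and labels below are made up.

```python
from collections import Counter, defaultdict

def map_clusters_to_labels(cluster_ids, true_labels):
    """Assign each cluster the most frequent true label among its members."""
    members = defaultdict(list)
    for cluster, label in zip(cluster_ids, true_labels):
        members[cluster].append(label)
    return {c: Counter(ls).most_common(1)[0][0] for c, ls in members.items()}

def clustering_accuracy(cluster_ids, true_labels):
    """Fraction of samples whose cluster's majority label equals their own label."""
    mapping = map_clusters_to_labels(cluster_ids, true_labels)
    hits = sum(mapping[c] == y for c, y in zip(cluster_ids, true_labels))
    return hits / len(true_labels)

# Made-up cluster assignments and digit labels for eight images.
cluster_ids = [0, 0, 0, 1, 1, 2, 2, 2]
true_labels = [7, 7, 1, 3, 3, 1, 1, 7]
mapping = map_clusters_to_labels(cluster_ids, true_labels)
accuracy = clustering_accuracy(cluster_ids, true_labels)
print(mapping, accuracy)
```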

GitHub link: https://github.com/umeshsolanki2005/Image-Classification-K-Means-Clustering.git

Task 7: Anomaly Detection

Anomaly detection is a technique used to identify unusual or rare data points that deviate significantly from the normal pattern in a dataset, often indicating critical events such as fraud, defects, or security breaches. It can be performed using supervised learning, where labeled anomalies are used for training, or unsupervised learning, where patterns are identified without predefined labels. In this task, the Isolation Forest algorithm is applied to detect anomalies in a synthetic dataset consisting of two normally distributed features, with artificial anomalies introduced to simulate unusual behavior. The dataset is first standardized using StandardScaler and then passed to Isolation Forest, which assigns an anomaly score to each data point. Finally, a scatter plot visualizes the result with the deviating points highlighted, showing that the method separates the injected outliers from the normal data.
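The intuition behind Isolation Forest is that outliers are separated from the rest of the data by fewer random splits. The sketch below is a heavily simplified plain-Python illustration of that idea, not sklearn's IsolationForest: it draws fresh random splits for each scored point instead of building shared trees on subsamples, and the data, seeds, and function names are assumptions.

```python
import math
import random

def standardize(data):
    """Rescale every feature to zero mean and unit variance (z-scores)."""
    columns = list(zip(*data))
    means = [sum(col) / len(col) for col in columns]
    stds = [math.sqrt(sum((v - m) ** 2 for v in col) / len(col))
            for col, m in zip(columns, means)]
    return [tuple((v - m) / s for v, m, s in zip(row, means, stds)) for row in data]

def path_length(point, data, rng, depth=0, max_depth=10):
    """Number of random axis-aligned splits needed to isolate `point`."""
    if len(data) <= 1 or depth >= max_depth:
        return depth
    feature = rng.randrange(len(point))
    low = min(row[feature] for row in data)
    high = max(row[feature] for row in data)
    if low == high:
        return depth
    split = rng.uniform(low, high)
    # Keep only the rows that fall on the same side of the split as `point`.
    same_side = [row for row in data
                 if (row[feature] < split) == (point[feature] < split)]
    return path_length(point, same_side, rng, depth + 1, max_depth)

def average_path_length(n):
    """Normalizing constant c(n): average path length of a failed BST search."""
    if n <= 1:
        return 0.0
    harmonic = math.log(n - 1) + 0.5772156649
    return 2.0 * harmonic - 2.0 * (n - 1) / n

def anomaly_score(point, data, n_trees=100, seed=0):
    """Score in (0, 1]; values closer to 1 mean the point is easier to isolate."""
    rng = random.Random(seed)
    mean_depth = sum(path_length(point, data, rng) for _ in range(n_trees)) / n_trees
    return 2.0 ** (-mean_depth / average_path_length(len(data)))

# Synthetic data: 200 points from two standard normal features plus one outlier.
data_rng = random.Random(42)
normal_points = [(data_rng.gauss(0, 1), data_rng.gauss(0, 1)) for _ in range(200)]
scaled = standardize(normal_points + [(8.0, 8.0)])
outlier_score = anomaly_score(scaled[-1], scaled)
typical_score = anomaly_score(scaled[0], scaled)
print(outlier_score > typical_score)
```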

GitHub link: https://github.com/umeshsolanki2005/Anomaly-Detection.git

Task 8: Generative AI Task Using GAN

This task involves developing a Generative Adversarial Network (GAN) to generate realistic images using the MNIST dataset. A GAN consists of a generator, which creates synthetic images, and a discriminator, which classifies images as real or fake. The two networks compete against each other, and this competition improves image quality over time. The images are normalized and fed to a model built with TensorFlow. The generator transforms random noise into images using transposed convolutions, while the discriminator uses convolutional layers to classify them. The model is trained with the Adam optimizer and binary cross-entropy loss for 50 epochs. Finally, the trained GAN generates synthetic images, showing how deep learning can be used for image synthesis and data augmentation.
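The adversarial objective can be written out with binary cross-entropy alone. The sketch below is a framework-free illustration that assumes the commonly used formulation in which the generator is trained to make the discriminator output 1 for generated images; the probabilities are hypothetical, and the TensorFlow code in the repository may differ.

```python
import math

def binary_cross_entropy(predictions, targets, eps=1e-7):
    """Mean BCE between predicted probabilities and 0/1 targets."""
    total = 0.0
    for p, t in zip(predictions, targets):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(predictions)

def discriminator_loss(real_probs, fake_probs):
    """Real images should be scored 1, generated images should be scored 0."""
    real_loss = binary_cross_entropy(real_probs, [1] * len(real_probs))
    fake_loss = binary_cross_entropy(fake_probs, [0] * len(fake_probs))
    return real_loss + fake_loss

def generator_loss(fake_probs):
    """The generator improves when the discriminator scores its images as real."""
    return binary_cross_entropy(fake_probs, [1] * len(fake_probs))

# Hypothetical discriminator outputs (probability that an image is real).
real_probs = [0.9, 0.8]
fake_probs_early = [0.1, 0.2]  # fakes are easy to spot
fake_probs_late = [0.6, 0.7]   # fakes have become more convincing
print(generator_loss(fake_probs_early), generator_loss(fake_probs_late))
```

As the generated images become more convincing, the generator loss falls while the discriminator loss rises, which is the competition described above.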

GitHub link: https://github.com/umeshsolanki2005/Generative-AI-GAN.git

Task 9: PDF Query Using LangChain

This task involves developing a PDF query system using LangChain and Ollama to extract relevant information from documents based on user queries. The system loads a PDF and extracts its text with PyPDFLoader, then splits the text into smaller chunks with RecursiveCharacterTextSplitter for efficient retrieval. The chunks are embedded using HuggingFaceEmbeddings and stored in a FAISS vector database for fast similarity search. A RetrievalQA chain combines the retriever with Ollama's LLM (Llama 3.2): the retriever fetches the chunks most relevant to the query, and the LLM generates an answer from them. When queried about "basic human aspirations," the system retrieved the relevant passages and generated a meaningful response based on that context. This approach makes documents searchable by meaning rather than by exact keywords, which is useful for research and automated document analysis.
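The retrieval half of such a pipeline (chunk, embed, rank by similarity) can be sketched without any external library. Everything below is a stand-in: the word-based splitter is much simpler than RecursiveCharacterTextSplitter, the bag-of-words vector replaces a real embedding model, the sorted list replaces FAISS, and the sample text is made up.

```python
import math
from collections import Counter

def split_words(text, chunk_size=10, overlap=3):
    """Cut `text` into chunks of `chunk_size` words that overlap by `overlap` words."""
    words = text.split()
    step = chunk_size - overlap
    last_start = max(len(words) - overlap, 1)
    return [" ".join(words[i:i + chunk_size]) for i in range(0, last_start, step)]

def embed(text):
    """Toy embedding: a bag-of-words count vector (stand-in for a real model)."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, chunks, k=1):
    """Return the k chunks most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, embed(c)), reverse=True)
    return ranked[:k]

# Made-up sample text standing in for the parsed PDF.
text = (
    "This course covers value education in the first semester. "
    "Basic human aspirations are continuous happiness and prosperity for all. "
    "Assignments are submitted online every single week by students."
)
chunks = split_words(text)
top = retrieve("basic human aspirations", chunks, k=1)
print(top[0])
```

In the full system the retrieved chunk would be passed to the LLM as context, and the LLM would write the final answer.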

GitHub link: https://github.com/umeshsolanki2005/PDF-QUERY.git

Task 10: Table Analysis Using PaddleOCR

This task utilizes PaddleOCR to extract and analyze tabular data from images or scanned documents. The pipeline detects tables, extracts text with confidence scores, and processes the data using pandas. The extracted data undergoes statistical analysis and is visualized using Matplotlib and Seaborn. When tested on a sample image, the system successfully extracted 89 text entries with high confidence (average 0.99). The implementation enables efficient table recognition, data extraction, and analysis, making it valuable for document automation, digitization, and research applications.
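As a rough sketch of the analysis step, the code below summarizes a list of (text, confidence) pairs and flags low-confidence entries. The entries are hypothetical and are not the 89 entries reported above; the threshold and function name are also assumptions, and plain Python is used here in place of pandas.

```python
from statistics import mean

# Hypothetical OCR output as (text, confidence) pairs.
ocr_entries = [("Name", 0.99), ("Qty", 0.98), ("Widget", 0.97), ("12", 0.62)]

def summarize(entries, threshold=0.9):
    """Count entries, average their confidence, and list texts below `threshold`."""
    confidences = [conf for _, conf in entries]
    low = [text for text, conf in entries if conf < threshold]
    return {
        "count": len(entries),
        "mean_confidence": mean(confidences),
        "low_confidence": low,
    }

summary = summarize(ocr_entries)
print(summary)
```

Entries flagged this way could be reviewed manually before the table is used for further analysis.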

GitHub link: https://github.com/umeshsolanki2005/Table-Analysis.git